Powers and Perils of Language Technologies

Language technology (conversational AI, text analysis, speech recognition, text generators, summarizers) has taken the world by storm. It is now widely used in customer service, healthcare, and elsewhere.

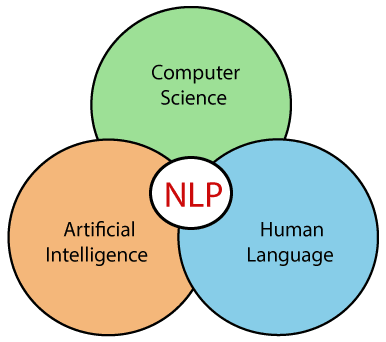

At the heart of these technologies lies natural language processing (NLP), a branch of artificial intelligence that deals with the interaction between computers and humans using natural language. The ultimate objective of NLP is to read, decipher, and make sense of human languages in a manner that is valuable. This post takes a deep dive into language technologies to see whether they can ever parallel the abilities of human language processing.

Alexa, Siri, Google Assistant... how do they work?

While there has been immense progress in language technologies over the years, paralleling human language processing levels still remains a long shot.

The characteristics of human language that make NLP challenging are discussed below:

- Productive (infinite set of possible expressions)

Human language processing involves the construction and interpretation of new signals that have not been encountered before and that have not been pre-programmed.

- Generative / Recursive

Because the set of possible expressions is infinite, language systems need to be built so that they can generate an unbounded number of expressions from a finite set of rules, since storing every expression is impossible.

- Duality

Human languages have two levels. At the first level are minimal units (phonemes for speech, letters of the alphabet for writing) that have no meaning on their own. At the second level, these units are combined into meaningful forms. With only a limited set of distinct sounds, one can produce a very large number of sound combinations (e.g. words) that differ in meaning.

- Displacement

The feature of language that refers to the capability to speak not only about things happening at the time and place of talking but also about other situations, real or unreal, past or future.

- Arbitrariness

Words have no principled or systematic connection with what they mean. One has to know a specific language in order to understand its arbitrary words.

Now, let us take a look at how natural language processing works in action-laden contexts.

Natural Language Processing in Action:

“Billy hit the ball over the house.”

Categories of Information Extracted

- Semantic information: information that contains meaning

person (BILLY); the act of striking an object with another object (HIT); spherical play item (BALL); a place people live (HOUSE)

- Syntax information: arrangement of words in sentences, clauses, and phrases

subject (BILLY); action (HIT); direct object (BALL); object of the preposition “over” (HOUSE)

- Context information: this sentence is about a child playing with a ball

Each type of information, taken in isolation, isn’t very helpful. Each gives only a vague idea of what the sentence is about; full understanding requires successfully combining all three components, which human language processing accomplishes.

Natural Language Processing attempts to teach computer systems to identify basic rules and patterns of language. In many languages, a proper noun followed by the word “street” probably denotes a street name. Similarly, a number followed by a proper noun followed by the word “street” is probably a street address. And people’s names usually follow generalized two-or three-word formulas of proper nouns and nouns.
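The street-name and street-address rules described above can be sketched with regular expressions. This is only an illustration: it assumes a "proper noun" can be approximated by a single capitalized word, and the pattern and function names are made up for this example.

```python
import re

# Assumption: a "proper noun" is approximated by one capitalized word.
STREET_NAME = re.compile(r"\b[A-Z][a-z]+ [Ss]treet\b")
# A number, then a proper noun, then the word "street".
STREET_ADDRESS = re.compile(r"\b\d+ [A-Z][a-z]+ [Ss]treet\b")


def find_street_names(text):
    """Return every 'ProperNoun street' match found in the text."""
    return [match.group(0) for match in STREET_NAME.finditer(text)]


def find_street_addresses(text):
    """Return every 'number ProperNoun street' match found in the text."""
    return [match.group(0) for match in STREET_ADDRESS.finditer(text)]


text = "She moved from 42 Baker Street to a flat on Oxford street."
print(find_street_names(text))      # ['Baker Street', 'Oxford street']
print(find_street_addresses(text))  # ['42 Baker Street']
```

Rules like these are cheap to write but brittle: multi-word street names, abbreviations such as "St.", and lowercase spellings all need additional patterns.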

Unfortunately, recording and implementing language rules takes a lot of time (given the immense complexity of human languages explained above). What’s more, NLP rules can’t keep up with the evolution of language. The Internet has butchered traditional conventions of the English language, and no static NLP codebase can possibly encompass every inconsistency and meme-ified misspelling on social media.

This is a lot for language systems to account for. The rest of this post focuses on the progress made so far, unanswered questions, and inherent roadblocks (e.g. universal grammar) in the quest to parallel the human level of processing. Will language technologies ever catch up to human language processing?

Evolution of Language Processing Systems:

Rule-Based Systems: Symbolic NLP (the 1950s to early 1990s)

- hand-crafted systems of rules based on linguistic structures (syntax, semantics) that imitate the human way of building grammar structures

- tend to focus on pattern-matching or parsing

- can often be thought of as “fill in the blanks” methods

Some Examples:

A) Rule-Based Sentence Parsing — Part of Speech Tagging

(E.g., English has the structure SVO (Subject Verb Object), while Hindi has SOV (Subject Object Verb).)

B) Rule-based approaches use thesauri and word lists. Data sources such as WordNet are very useful for deterministic rule-based approaches.

C) Rule-Based Sentiment Analysis

- Define lists of polarized words. (lists of positive and negative words or sentiment library)

- Count the number of positive and negative words that appear in the text.

positive > negative : Text is Positive

negative > positive: Text is Negative

positive = negative : Text is Neutral
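The counting rule above fits in a few lines. The sketch below uses two tiny, made-up word lists as placeholders; a real system would load a much larger sentiment lexicon.

```python
import re

# Hypothetical, deliberately tiny sentiment lists for illustration only.
POSITIVE_WORDS = {"good", "great", "love", "excellent", "happy"}
NEGATIVE_WORDS = {"bad", "terrible", "hate", "awful", "sad"}


def classify_sentiment(text):
    """Count polarized words and compare the two totals."""
    tokens = re.findall(r"[a-z']+", text.lower())
    positive = sum(token in POSITIVE_WORDS for token in tokens)
    negative = sum(token in NEGATIVE_WORDS for token in tokens)
    if positive > negative:
        return "Positive"
    if negative > positive:
        return "Negative"
    return "Neutral"


print(classify_sentiment("I love this great phone"))   # Positive
print(classify_sentiment("Good screen, bad battery"))  # Neutral
print(classify_sentiment("It is not good"))            # Positive
```

The last call shows a typical weakness of pure word counting: negation is ignored, so "not good" is still scored as positive.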

Machine Learning (the 1990s to 2014): Statistical Methods

There’s a famous quip by Frederick Jelinek about speech processing:

“Every time I fire a linguist, the performance of the speech recognizer goes up”

Machine learning takes a probabilistic approach, using historical data and outcomes. It considers not only the input but any number of other factors. A machine learning model picks up patterns and trends in historical data and gives the probability of different outcomes based on them.

Examples:

Stochastic Part-of-Speech Tagging

Problem: words are ambiguous (they can have more than one possible part of speech), and the goal is to find the correct tag for the situation.

Approach: calculate the probability of a given sequence of tags occurring. This is sometimes referred to as the n-gram approach, because the best tag for a given word is determined by the probability that it occurs with the n previous tags.

The same idea applies to word prediction: estimate p(w | h), the probability of seeing the word w given a history h of previous words, where the history contains n-1 words.

However, conditioning on a long history is not feasible for a large corpus, as it would entail sifting through the entire text for each word encountered. A solution is the bigram model, based on Markov models, which approximates the history by the single previous word.

Markov models are the class of probabilistic models that assume that we can predict the probability of some future unit without looking too far in the past. In practice, it’s more common to use trigram models, which condition on the previous two words rather than the previous word, or 4-gram or even 5-gram models when sufficient training data is available.
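A bigram model can be estimated simply by counting adjacent word pairs. The following is a minimal sketch on a made-up three-sentence corpus, using maximum-likelihood estimates with no smoothing; the `<s>` and `</s>` boundary markers and the function names are choices made for this example.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Estimate P(word | previous word) from raw counts."""
    pair_counts = defaultdict(Counter)
    for sentence in sentences:
        # Sentence boundary markers let the model score first and last words.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for previous, current in zip(tokens, tokens[1:]):
            pair_counts[previous][current] += 1
    model = {}
    for previous, counts in pair_counts.items():
        total = sum(counts.values())
        model[previous] = {word: count / total for word, count in counts.items()}
    return model


def bigram_probability(model, previous, word):
    """Look up P(word | previous); unseen pairs get probability 0."""
    return model.get(previous, {}).get(word, 0.0)


corpus = ["the dog barks", "the dog sleeps", "the cat sleeps"]
model = train_bigram_model(corpus)
print(bigram_probability(model, "the", "dog"))    # 2 of the 3 words after "the"
print(bigram_probability(model, "dog", "barks"))  # 0.5
print(bigram_probability(model, "cat", "barks"))  # 0.0, never seen together
```

Without smoothing, any pair that did not occur in the training data is assigned zero probability, which is why such models need large corpora.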

A major critique of statistical ML systems is that they lack an understanding of linguistic phenomena and handle text in a naive way. Understanding the semantic meaning of the words in a phrase remains an open task. Furthermore, while statistical methods are an improvement over rule-based approaches, they are still far from achieving human language processing capabilities. Limitations include the need for large amounts of data and the inability to handle out-of-vocabulary (OOV) words (input words that were not present in a system’s dictionary or database during its preparation). If the goal is to create language technologies comparable to human language processing, the statistical/probabilistic approach may be the wrong path to take.

Another recent trend… Deep Learning & Artificial Neural Networks

Connectionism is the theory underpinning artificial neural networks (ANNs). It is an approach in the field of cognitive science that hopes to explain mental phenomena using ANNs.

Basic Approach of Connectionism:

- cognitive modeling based on the kind of computations carried out by the brain

- the basic operation involves one neuron passing information to other neurons as a function of the sum of the signals it receives

- Information about an input signal or memory of past events is distributed across many neurons and connections

An artificial neural network (ANN) is a computing system designed to simulate the way the human brain analyzes and processes information.

Connectionist Network:

- a set of units (neurons)

- a specific pattern of connectivity

- connections are directed

- connections are associated with a strength (weight)

- each unit has a level of activation

- hidden layer: combines its input with the weights from the previous layer, applies a non-linearity, and sends the output to the next layer. Which operations are applied depends on the type of neural network
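The components listed above can be put together in a minimal forward pass. This sketch assumes a plain feed-forward network with tanh as the non-linearity; the weights are arbitrary numbers picked for illustration, not learned values.

```python
import math


def layer_forward(inputs, weights, biases, activation=math.tanh):
    """Each unit sums its weighted inputs, adds a bias, and applies a non-linearity."""
    outputs = []
    for unit_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        outputs.append(activation(total))
    return outputs


def network_forward(inputs, layers):
    """Pass activations through the layers in order (connections are directed)."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# Two inputs -> hidden layer with two units -> one output unit.
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, 0.0]),  # hidden layer: one weight row per unit
    ([[1.0, -1.0]], [0.0]),                   # output layer
]
output = network_forward([1.0, 1.0], layers)
print(output)
```

Each inner list of weights belongs to one unit, so the connection strengths and the pattern of connectivity are both visible in the `layers` structure.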

Training Phase

The aim of the training phase is to learn to recognize patterns in data. Training methods are either supervised or unsupervised.

- Supervised methods (a specific, known input-to-output mapping is required): “training examples” of inputs are provided together with their target outputs. The difference between the desired and the actual output is reduced by backpropagation: the network works backward, from the output units to the input units, adjusting the weights of its connections until the error is as low as possible.

- Unsupervised methods: the network adapts to aspects of the statistical structure of the input examples without mapping them to target outputs (e.g., the discovery of regularities in the phonological structure of language). These networks are well suited to uncovering statistical structure present in the environment without requiring the modeler to be aware of what that structure is.
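To make the supervised case concrete, here is a sketch of the error-driven weight update for a single sigmoid unit learning the logical OR function. It is the simplest case of the idea: with no hidden layer, the error does not have to be propagated back through earlier layers, which is the part full backpropagation adds. The learning rate, epoch count, and function names are arbitrary choices for this example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    """Activation of the unit for one input pattern."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train_unit(examples, epochs=5000, learning_rate=0.5):
    """Adjust weights to shrink the squared difference between output and target."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in examples:
            output = predict(weights, bias, inputs)
            # Gradient of the squared error with respect to the unit's summed input.
            delta = (output - target) * output * (1.0 - output)
            weights = [w - learning_rate * delta * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * delta
    return weights, bias


# Training examples: inputs paired with their target outputs (logical OR).
OR_EXAMPLES = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = train_unit(OR_EXAMPLES)
for inputs, target in OR_EXAMPLES:
    print(inputs, target, round(predict(weights, bias, inputs), 3))
```

After training, the unit's output is close to 0 for the input [0, 0] and close to 1 for the other three inputs.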

Emergent linguistic structure in artificial neural networks trained by self-supervision

(Manning, Clark, Hewitt, Khandelwal, Levy, 2020)

Unlike statistical methods, ANNs are able to gain knowledge of linguistic structures like syntax and coreference. The paper cited above analyzed Bidirectional Encoder Representations from Transformers (BERT) and found that BERT was able to learn the hierarchical structure of language.

The self-supervision task used to train BERT is the masked language-modeling or cloze task, where one is given a text in which some of the original words have been replaced with a special mask symbol. The goal is to predict, for each masked position, the original word that appeared in the text (fig. 1). To perform well on this task, the model needs to leverage the surrounding context to infer what that word could be.
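The data side of this task is easy to sketch: hide some tokens and keep the originals as prediction targets. The example below is an illustration only. The masked positions are fixed by hand so the result is reproducible (in training they are chosen at random), and the sentence and function name are made up.

```python
MASK = "[MASK]"


def mask_tokens(tokens, positions):
    """Replace the tokens at the given positions with the mask symbol.

    Returns the masked sequence plus a dict mapping each masked position
    to the original word the model is expected to predict.
    """
    masked = list(tokens)
    targets = {}
    for position in positions:
        targets[position] = tokens[position]
        masked[position] = MASK
    return masked, targets


tokens = "the chef cooked a delicious meal".split()
masked, targets = mask_tokens(tokens, positions=[1, 4])
print(masked)   # ['the', '[MASK]', 'cooked', 'a', '[MASK]', 'meal']
print(targets)  # {1: 'chef', 4: 'delicious'}
```

No labels are written by hand here: the targets come from the text itself, which is what makes the task self-supervised.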

Training of this model takes just a sequence of words as input and does not explicitly represent syntax.

English subject-verb agreement requires an understanding of hierarchical structure: the form of the verb (singular/plural) depends on whether the subject is singular or plural. While statistical systems can learn statistics about sequential co-occurrence, subject-verb agreement is based not on the linear order of words but on their syntactic relationships.

To correctly perform the word form prediction task, the model must ignore the attractor(s) (here, pizzas) and assign the verb’s form based on its syntactic subject (chef), in accord with the sentence’s implied hierarchical structure rather than linear proximity. Artificial neural networks have been shown to perform well at such tasks.

These models seem promising for the growth of the field of NLP as this would enable models to have some sort of language acquisition and understanding similar to that of humans.

Gulordava, Bojanowski, Grave, Linzen, and Baroni (2018) studied recurrent neural networks (RNNs) in linguistic processing tasks, asking to what extent RNNs learn to track abstract hierarchical syntactic structure. The study tested long-distance number agreement in various constructions in four languages (Italian, English, Hebrew, and Russian). It included nonsensical sentences, for which RNNs could not rely on semantic or lexical cues. For example, one minimal pair is “The colorless green ideas I ate with the chair sleep/sleeps furiously” (here, the plural verb variant, agreeing with ideas, is the grammatical one). The model, without further task-specific tuning, is asked to compute the probability of the two variants, and it is said to have produced the correct judgment if it assigns a higher probability to the grammatically correct one.
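The evaluation procedure itself is simple to express: score both variants of each minimal pair and count how often the grammatical one wins. In the sketch below, `nearest_noun_score` is a hypothetical toy stand-in for a language model, not the RNN from the paper. It deliberately relies on linear proximity (agreement with the closest preceding noun) to show how such a shortcut fails when an attractor intervenes.

```python
def agreement_accuracy(minimal_pairs, sentence_score):
    """Fraction of pairs where the grammatical variant gets the higher score."""
    correct = 0
    for grammatical, ungrammatical in minimal_pairs:
        if sentence_score(grammatical) > sentence_score(ungrammatical):
            correct += 1
    return correct / len(minimal_pairs)


# Tiny hand-made lexicon, just enough for the two example pairs.
PLURAL_NOUNS = {"ideas"}
SINGULAR_NOUNS = {"chair"}
PLURAL_VERBS = {"sleep"}
SINGULAR_VERBS = {"sleeps"}


def nearest_noun_score(sentence):
    """Toy scorer: 0.0 if the verb agrees with the closest preceding noun, else -1.0."""
    last_noun_is_plural = None
    for word in sentence.lower().split():
        if word in PLURAL_NOUNS:
            last_noun_is_plural = True
        elif word in SINGULAR_NOUNS:
            last_noun_is_plural = False
        elif word in PLURAL_VERBS:
            return 0.0 if last_noun_is_plural is True else -1.0
        elif word in SINGULAR_VERBS:
            return 0.0 if last_noun_is_plural is False else -1.0
    return -1.0


pairs = [
    # (grammatical, ungrammatical); "chair" acts as an attractor in the first pair.
    ("the colorless green ideas I ate with the chair sleep furiously",
     "the colorless green ideas I ate with the chair sleeps furiously"),
    ("the ideas sleep", "the ideas sleeps"),
]
print(agreement_accuracy(pairs, nearest_noun_score))  # 0.5
```

The toy scorer gets the simple pair right but is fooled by the attractor, so a model that scores well on such pairs must be tracking the syntactic subject rather than the nearest noun.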

In all cases, the neural networks display a preference for grammatically correct sentences that is well above chance level and competitive baselines (the lowest performance occurs in English, where the network still guesses correctly in 74% of cases, against a chance level of 50%). Moreover, for Italian, the authors compare the network’s performance with that of human subjects taking the same test. Human accuracy turns out to be only marginally above that of the network (88.4% versus 85.5%).

Furthermore, the nonsensical twist of Gulordava et al. strips off possible semantic, lexical, and collocational confounds, focusing on abstract grammatical generalization. The paper supports the hypothesis that RNNs are not just shallow pattern extractors but acquire deeper grammatical competence.

These unsupervised learning results seem to suggest that the study of language by linguists (the formal rules, patterns, grammar, and theories) is irrelevant to NLP systems, which are able to come up with their own generalizations.

This is a startling and intriguing result. Traditionally, much of the emphasis in NLP has been on using labels for part of speech, syntax, etc., as an aid in other downstream tasks. This result suggests that large-scale manual construction of syntactically labeled training data may no longer be necessary for many tasks. Despite its simple nature, word prediction is a general task that benefits from syntactic, semantic, and discourse information, which makes it a very powerful multidimensional supervision signal.

A success story: Meena

- Meena is the first Open Domain Chatbot

*Open-domain question answering deals with questions about nearly anything, and can only rely on general ontologies and world knowledge

- trained end-to-end on data that is mined and filtered from public domain social media conversations

Meena is built on a sequence-to-sequence (seq2seq) model with an Evolved Transformer (ET) that includes 1 ET encoder block and 13 ET decoder blocks

- seq2seq Model: takes a sequence of items (words, letters, time series, etc) and outputs another sequence of items

The Measure of Human Likeness (Human Evaluation Metric)

(Sensibleness and Specificity Average (SSA))

- making sense ( logical output)

- being specific ( given the context, avoiding generic responses )
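As a rough sketch, SSA can be computed from per-response human labels as the average of two rates: the share of responses judged sensible and the share judged specific. The labels below are invented for illustration, and the sketch assumes that a response only counts as specific if it is also sensible.

```python
def ssa(labels):
    """Average of the sensibleness rate and the specificity rate.

    labels: list of (sensible, specific) booleans, one pair per response.
    """
    total = len(labels)
    sensibleness = sum(1 for sensible, _ in labels if sensible) / total
    # Assumption: a response that does not make sense cannot count as specific.
    specificity = sum(1 for sensible, specific in labels if sensible and specific) / total
    return (sensibleness + specificity) / 2


# Hypothetical human judgments for four chatbot responses.
labels = [(True, True), (True, False), (False, False), (True, True)]
print(ssa(labels))  # 0.625
```

A generic reply such as "I don't know" would be labeled sensible but not specific, so it raises the first rate while leaving the second unchanged.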

Meena is the first successful attempt at building an end-to-end open-domain chatbot. The results are very promising. Perhaps passing the Turing Test is within our grasp?

Turing Test: Turing proposed that if the subject cannot tell the difference between the human and the machine, it may be concluded that the machine is capable of thought.

“Man alone of the animals possesses speech.” (Aristotle) Words, in other words, are key to what makes us special, and our intellect unique, relative to other species.

Language does not only involve speech processing and the parsing of sentences but the integration of various cognitive faculties, one’s context, memories, emotions, and preferences. There is still a significant gap to fill for language technologies before they can parallel human language processing.

Take the sentence “I love coffee” as an example. If you picked a note off a desk and read this, would there be any way to pick apart the letters, the three words, and the few syllables to eventually guess that I like a light roast coffee because it contains the most caffeine for my brew style? It would never happen. Too much information is completely lost. A computer could only ever hope to ballpark it if it had a working knowledge of the context of the situation.

Another ROAD BUMP... THE NATIVIST POSITION

Internal Representations and I-Language

Infants must possess innate, language-specific knowledge or learning mechanisms because otherwise, language acquisition in the absence of negative evidence would be impossible (Chomsky, 1965; Gold, 1967; Pinker, 1989; among many others).

Two Key Arguments underpinning the Nativist Position (Jackendoff, 1994):

A) The argument for Mental Grammar

The expressive variety of language use implies that a language user’s brain contains a set of unconscious grammatical principles.

B) The Argument for Innate Knowledge

Child language acquisition implies that the human brain contains a genetically determined specialization for language.

Chomsky’s view is that what is special about human brains is that they contain a specialized ‘language organ,’ an innate mental ‘module’ or ‘faculty,’ that is dedicated to the task of mastering a language. He believes the language faculty contains innate knowledge of various linguistic rules, constraints, and principles; this innate knowledge constitutes the ‘initial state’ of the language faculty.

In interaction with one’s experiences of language during childhood (that is, with one’s exposure to what Chomsky calls the ‘primary linguistic data’), it gives rise to a new body of linguistic knowledge, namely, knowledge of a specific language (like Chinese or English). This ‘attained’ or ‘final’ state of the language faculty constitutes one’s ‘linguistic competence’ and includes knowledge of the grammar of one’s language.

Nativists assume that the lexical categories, or the semantic features to which these categories relate, are innately specified in the learner, and that individual words’ membership in these categories is then determined from exposure to the language.

Final Words

Language technologies have come a long way, from naive rule-based systems to statistical machine learning algorithms and the artificial neural networks of today. Human language processing is complex, and linguists are still trying to understand its nuts and bolts. The nativist position, which claims that there exists a universal grammar and an innate language-learning mechanism, could be a major setback for language technologies if true. Unlike discrete domains such as quantitative skills, speed, and accuracy, language processing is a multi-domain process that engages several cognitive systems and may be difficult to accomplish by discrete mechanisms. Perhaps only when we have a full understanding of human language processing will language technologies be able to parallel it.

CITATIONS:

1. Koehn, P. 2010. Statistical Machine Translation. Cambridge University Press, Cambridge. https://www.biorxiv.org/content/10.1101/2020.07.03.186288v1.full.pd

2. Christopher D. Manning, Kevin Clark, John Hewitt, Urvashi Khandelwal, and Omer Levy. 2020. Emergent linguistic structure in artificial neural networks trained by self-supervision. Proceedings of the National Academy of Sciences 117(48):30046–30054. DOI: 10.1073/pnas.1907367117

3. Martin Everaert, Marinus Huybregts, Noam Chomsky, Robert Berwick, and Johan Bolhuis. 2015. Structures, not strings: Linguistics as part of the cognitive sciences. Trends in Cognitive Sciences 19(12):729– 743.

4. Gulordava, Kristina et al. “Colorless Green Recurrent Networks Dream Hierarchically.” Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers) (2018): n. pag. Crossref. Web.

5. Daniel Adiwardana, Minh-Thang Luong, David R. So, Jamie Hall, Noah Fiedel, Romal Thoppilan, Zi Yang, Apoorv Kulshreshtha, Gaurav Nemade, Yifeng Lu, Quoc V. Le. 2020. Towards a Human-like Open-Domain Chatbot

6. R. Jackendoff. 1994. Patterns in the Mind, Chapters 1–3.

7. P.N. Johnson-Laird. 1988. The Computer and the Mind. Harvard University Press, Chapter 10, pp. 174–194.

8. Connectionism Lecture Slides (from class)

9. Google AI Blog, January 2020 https://ai.googleblog.com/2020/01/towards-conversational-agent-that-can.html